Top 5 Methods to Remove Duplicate Words from a List

As a computer science engineer, I often deal with large amounts of text data. One common task in text processing is removing duplicate words from a list. This task looks simple but is crucial for improving data quality. Whether you are cleaning up user input, processing documents, or working with logs, you need clean and unique data.

In this guide, I will show five simple methods to remove duplicate words. The first four are short pieces of code that translate to most programming languages, and the fifth needs no code at all. I will also provide practical examples and tips for each method.

Why Removing Duplicate Words Matters

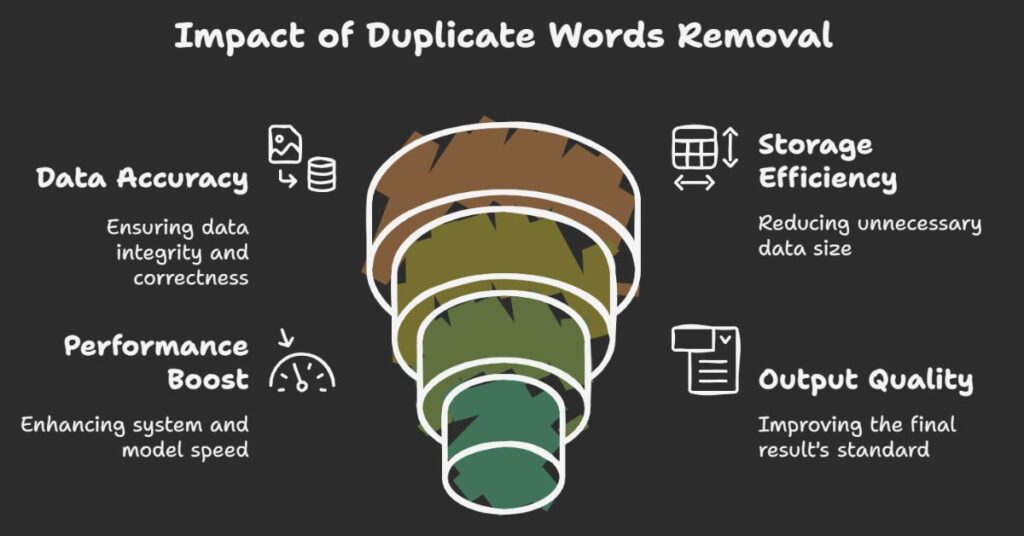

Duplicate words affect data accuracy. They increase storage size, add processing work, and lower the quality of the final output. In machine learning, for example, clean input can make models more accurate. In content writing, repeated words hurt readability and SEO. Removing duplicates helps avoid these problems.

Method 1 – Using Sets

How It Works

A set is a collection of unique elements. Python, Java, and JavaScript all provide a set type that discards duplicates when elements are added. Convert a list of words into a set, and only the unique words remain.

Example (Python)

    words = "apple banana apple orange banana"
    unique_words = list(set(words.split()))
    print(" ".join(unique_words))

Output (the order can differ from run to run)

orange banana apple

When to Use

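Use this method when the word order is not important. Python sets do not guarantee any order, so the result may not match the order of the input. (JavaScript's Set is an exception: it iterates in insertion order.)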
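If the order does not matter but you still want the same output on every run, one option is to sort the set. The following is a minimal sketch of that idea; the function name `unique_words_sorted` is my own and not part of any library.

```python
def unique_words_sorted(text: str) -> list[str]:
    """Return the distinct words in text, sorted alphabetically."""
    # set() drops the duplicates; sorted() turns the unordered set
    # into a list with a repeatable order.
    return sorted(set(text.split()))


print(" ".join(unique_words_sorted("apple banana apple orange banana")))
# prints: apple banana orange
```

Sorting replaces the original word order with alphabetical order, so this only helps when any consistent order is acceptable.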

Method 2 – Using Dictionaries

How It Works

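Dictionaries store key-value pairs, and each key can appear only once. Since Python 3.7, dictionaries are guaranteed to keep insertion order. Building a dictionary with dict.fromkeys() therefore removes duplicates while preserving the order in which each word first appeared.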

Example (Python)

    words = "apple banana apple orange banana"
    unique_words = list(dict.fromkeys(words.split()))
    print(" ".join(unique_words))

Output

apple banana orange

When to Use

Use this method when you need to keep the original order of words.
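To make it clearer why this keeps the first occurrence of each word, here is a small sketch that wraps the same call in a function. The name `dedupe_keep_order` is only illustrative.

```python
def dedupe_keep_order(text: str) -> str:
    """Remove repeated words, keeping the first occurrence of each."""
    # dict.fromkeys builds a dict whose keys are the words (every value is None).
    # A repeated key does not create a new entry, so the first position wins.
    first_seen = dict.fromkeys(text.split())
    # Iterating over a dict yields its keys in insertion order.
    return " ".join(first_seen)


print(dedupe_keep_order("orange apple banana apple orange"))
# prints: orange apple banana
```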

Method 3 – Loop and Check

How It Works

Loop over the words and add each one to a new list only if it is not already there. This approach needs nothing beyond a basic list, so it works in virtually any programming language.

Example (JavaScript)

    const input = "apple banana apple orange banana";
    const words = input.split(" ");
    const uniqueWords = [];

    words.forEach(word => {
      // Keep the word only the first time it appears
      if (!uniqueWords.includes(word)) {
        uniqueWords.push(word);
      }
    });

    console.log(uniqueWords.join(" "));

Output

apple banana orange

When to Use

Use this method in any language where sets or dictionaries are not available. Each includes() check scans the whole result list, so the loop gets slow on long inputs.
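Where a set type is available, the same loop can keep a set of words it has already seen, so each membership check takes roughly constant time instead of scanning the result list. Below is a rough Python sketch of that variation; `dedupe_with_seen_set` is a name I made up for the example.

```python
def dedupe_with_seen_set(words: list[str]) -> list[str]:
    """Loop-and-check deduplication that preserves first-seen order."""
    seen = set()   # words encountered so far (fast membership test)
    result = []    # unique words in their original order
    for word in words:
        if word not in seen:
            seen.add(word)
            result.append(word)
    return result


unique = dedupe_with_seen_set("apple banana apple orange banana".split())
print(" ".join(unique))
# prints: apple banana orange
```

The result list keeps the order, and the set is only used for the lookup, so this behaves like the plain loop but scales better to long inputs.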

Method 4 – Using Regular Expressions

How It Works

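Regular expressions match text patterns. In this example, the pattern \b\w+\b extracts each word and skips punctuation, and a loop then removes the duplicates. This method is useful when the input is not cleanly separated by spaces.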

Example (Python with Regex)

    import re

    words = "apple banana apple orange banana"
    unique_words = []
    # \b\w+\b matches each run of word characters, so punctuation is skipped
    for word in re.findall(r'\b\w+\b', words):
        if word not in unique_words:
            unique_words.append(word)
    print(" ".join(unique_words))

Output

apple banana orange

When to Use

Use this method when the input contains punctuation or other special characters that a plain split() would leave attached to the words.
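A regular expression can also match the repetition itself by using a backreference. The sketch below collapses a word that is immediately repeated ("the the"), which is a narrower job than the example above: repeats that are separated by other words are left alone. The names `REPEATED_WORD` and `collapse_adjacent_repeats` are my own.

```python
import re

# \b(\w+) captures a word; (?:\s+\1\b)+ matches one or more
# immediate repeats of that same word (\1 is the captured word).
REPEATED_WORD = re.compile(r"\b(\w+)(?:\s+\1\b)+")


def collapse_adjacent_repeats(text: str) -> str:
    """Replace runs like 'sat sat sat' with a single 'sat'."""
    return REPEATED_WORD.sub(r"\1", text)


print(collapse_adjacent_repeats("the the cat sat sat sat down"))
# prints: the cat sat down
```

This is handy for fixing typing slips in prose, where you want to remove doubled words without touching legitimate later uses of the same word.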

Method 5 – Online Tools or Script-Based Interfaces

How It Works

Online tools let users paste input and get the cleaned list instantly. These tools run scripts in the background. Users don’t need to write code.

Example Use

You paste:

apple banana apple orange banana

You get:

apple banana orange

When to Use

Use this method if you are not writing code or want a quick solution. Many websites offer free text utilities.

Best Practices for Removing Duplicate Words

- Always trim whitespace: Clean each word before checking duplicates.

- Convert to lower or upper case: This avoids case-sensitive mismatches like Apple vs apple.

- Preserve order when needed: Use methods like dictionaries or custom loops.

- Avoid nested loops on large inputs: Searching a list inside a loop takes quadratic time. Track the words you have seen in a set instead.

- Test with sample data: Check output before applying to real input.
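Several of these practices can be combined in one small function. The following is only a sketch under my own assumptions: it lowercases every word (so the output loses the original capitalization), drops empty entries, and uses a hypothetical name, `dedupe_normalized`.

```python
def dedupe_normalized(raw_words: list[str]) -> list[str]:
    """Trim, lowercase, and deduplicate words while keeping their order."""
    seen = set()
    result = []
    for raw in raw_words:
        word = raw.strip().lower()  # trim whitespace, ignore case
        # Skip entries that were only whitespace, and words already kept.
        if word and word not in seen:
            seen.add(word)
            result.append(word)
    return result


print(dedupe_normalized([" Apple", "apple ", "BANANA", "", "banana", "Orange"]))
# prints: ['apple', 'banana', 'orange']
```

If you need to keep the original capitalization, compare on the normalized word but append the trimmed original to the result instead.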

Common Use Cases

- Text cleanup for SEO: Remove duplicate keywords to improve clarity.

- Log file analysis: Ensure each event is processed once.

- Form input validation: Clean up repeated user entries.

- Data pre-processing for AI: Feed clean and consistent inputs to models.

- Content optimization: Improve user experience and readability.
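The same techniques apply to whole lines as well as single words. As a rough illustration of the log file case, the sketch below keeps the first copy of each line. It assumes that two identical lines describe the same event, which will not hold for logs where every line carries its own timestamp. The sample log and the name `unique_lines` are made up for the example.

```python
def unique_lines(log_text: str) -> list[str]:
    """Return the distinct non-empty lines of log_text in first-seen order."""
    lines = (line.strip() for line in log_text.splitlines())
    # dict.fromkeys drops repeated lines and keeps insertion order.
    return list(dict.fromkeys(line for line in lines if line))


# Hypothetical sample data, not taken from a real system
sample_log = """login user=alice
login user=bob
login user=alice
logout user=bob
"""

print(unique_lines(sample_log))
# prints: ['login user=alice', 'login user=bob', 'logout user=bob']
```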

Conclusion

Removing duplicate words is a small task but brings big improvements in data quality. Each method has a use case. Sets are fast but, in Python, do not keep order. Dictionaries and loops are better for ordered results. Regular expressions help when the input contains punctuation or special characters. Online tools offer fast and easy results.

Pick a method that fits your needs. Always test with real input before use. Clean input saves time, improves results, and ensures better output.
